What just happened? Apple has been slow to adopt generative AI, but that might be changing with the introduction of MM1, a multimodal large language model capable of interpreting both image and text data. This functionality could be included in the company’s next generation of handsets and services, although there are also rumors that Apple will integrate Google’s Gemini AI.

Apple researchers have developed MM1, a new approach for training large language models (LLMs) that incorporate both textual and visual information. The MM1 family of multimodal models scales up to 30 billion parameters and is trained on a dataset comprising image-caption pairs, interleaved image-text documents, and text-only data, according to a paper published by the researchers.

Multimodal Large Language Models (MLLMs), they explain, are large-scale foundational models that process image and text data to produce textual outputs. “After the rise of LLMs, MLLMs are emerging as the next frontier in foundation models,” they note.

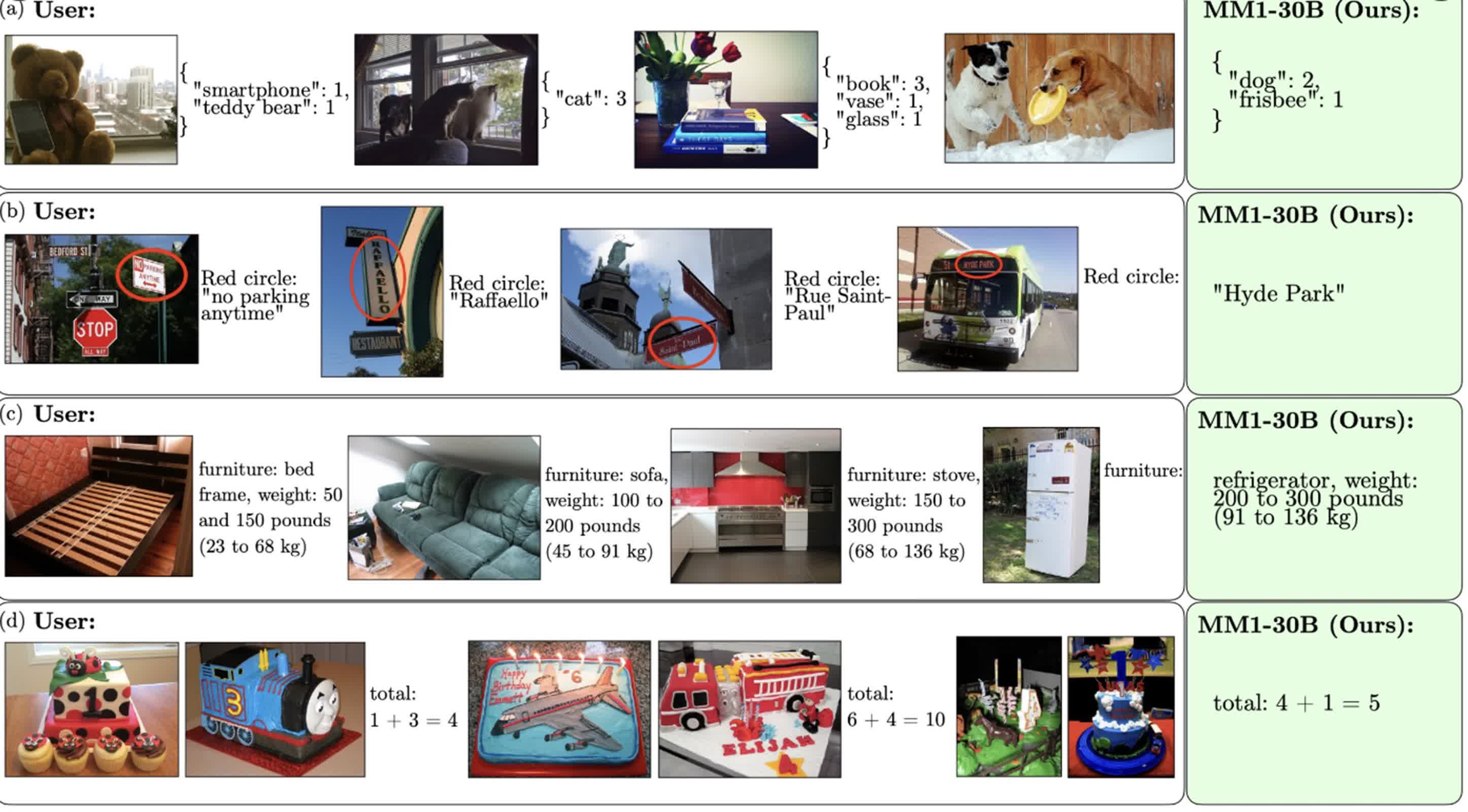

Apple claims that MM1 is capable of counting objects, identifying parts of images, demonstrating common-sense and word knowledge about everyday objects, and performing basic mathematical functions. The model supports in-context learning, meaning it doesn’t need retraining or fine-tuning for each new kind of query but can instead work out what is being asked from the context provided in the prompt. It also boasts multi-image reasoning, which allows it to interpret and draw conclusions from multiple images.

Apple provides the example of a user showing the model a photo and asking how much they would have to pay for all the beer on the table based on the prices on the menu.

The model responds by noting that while the image shows a menu with beer prices, it’s not entirely clear which specific beers are on the table. However, it tells the user, it can provide an estimate based on the visible prices. Its answer: “From the menu, it appears that the prices for beer are as follows: Magna: 5, Medalla: 5, Heineken: 6, Presidente: 6. Assuming that the beers on the table are Magna, Medalla, Heineken, and Presidente, and there are two of each, you would pay: 2 x Magna: 2 x 5 = 10, 2 x Medalla: 2 x 5 = 10, 2 x Heineken: 2 x 6 = 12, 2 x Presidente: 2 x 6 = 12. Total: 10 + 10 + 12 + 12 = 44.”
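For readers who want to check the model's arithmetic, here is a minimal Python sketch that reproduces the estimate. The prices and the "two of each" assumption come straight from the quoted answer; the names `menu_prices`, `BOTTLES_EACH`, and `beer_total` are ours for illustration and have nothing to do with how MM1 itself works.

```python
# Prices as read from the menu in the model's quoted answer.
menu_prices = {"Magna": 5, "Medalla": 5, "Heineken": 6, "Presidente": 6}

# Assumption stated in the model's answer: two of each beer on the table.
BOTTLES_EACH = 2


def beer_total(prices, quantity_each):
    """Return per-beer subtotals and the overall total for the table."""
    subtotals = {name: quantity_each * price for name, price in prices.items()}
    return subtotals, sum(subtotals.values())


subtotals, total = beer_total(menu_prices, BOTTLES_EACH)
for name, cost in subtotals.items():
    print(f"{BOTTLES_EACH} x {name} = {cost}")
print("Total:", total)
```

The total matches the 44 in the model's response. Changing `BOTTLES_EACH` or the price table shows how much the estimate depends on the model's guess about what is actually on the table.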

MM1 is “just the beginning,” according to Apple senior research engineer Brandon McKinzie, who is working on multimodal models. He also said that Apple is “already hard at work on the next generation of models.”

“This is just the beginning. The team is already hard at work on the next generation of models. Huge thanks to everyone that contributed to this project!”

– Brandon McKinzie (@mckbrando), March 15, 2024

News of MM1 comes amid a report that Apple is currently in negotiations to license Google’s Gemini AI tech for use in the next iPhone series. The partnership would give Google Gemini a wider audience and give Apple access to some of the most advanced generative AI tech available.

These negotiations also hint that Apple’s AI initiatives might not be progressing as swiftly as hoped. Apple has been the most cautious among the tech giants in adopting generative AI, preferring to wait for the market to mature slightly before making a commitment.

The unveiling of MM1 opens up new possibilities for Apple’s next generation of services. It is conceivable that MM1 could be integrated into Siri 2.0, enabling it to answer questions based on images. Additionally, iMessage could be enhanced with the new model, offering users more precise response suggestions based on shared images.